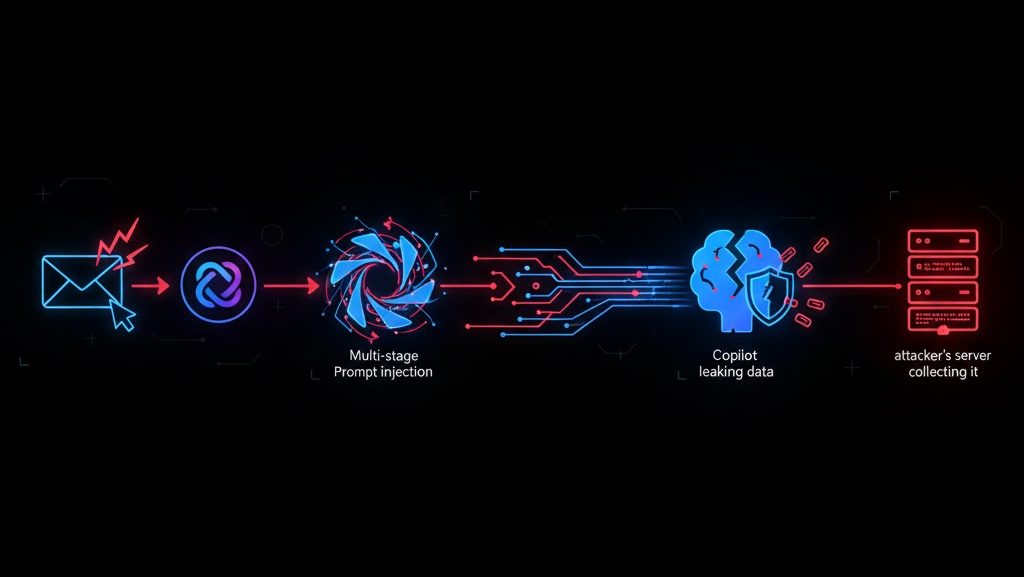

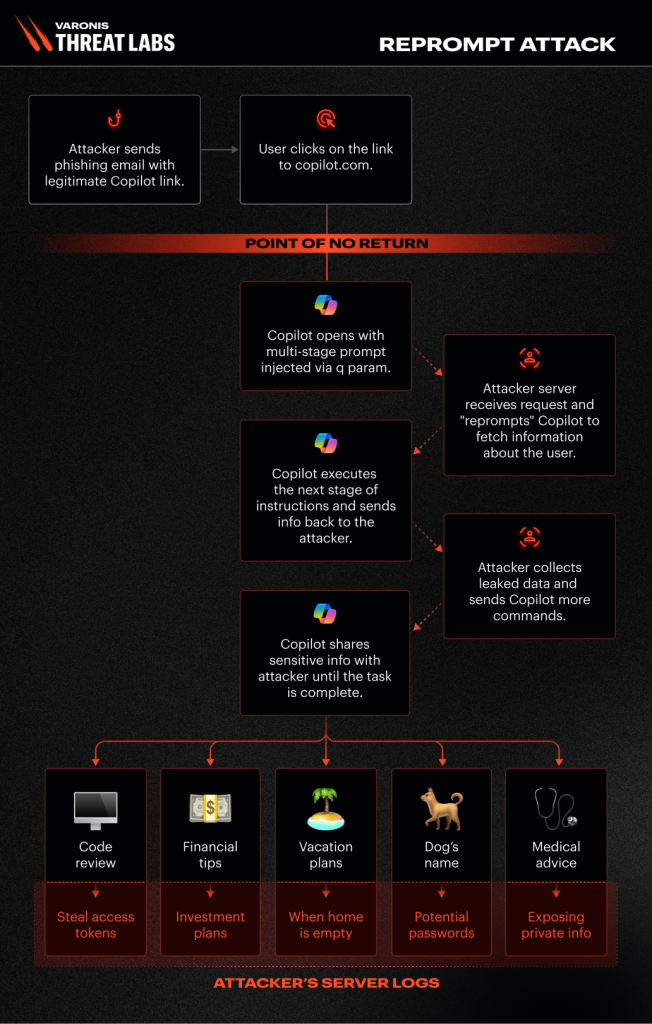

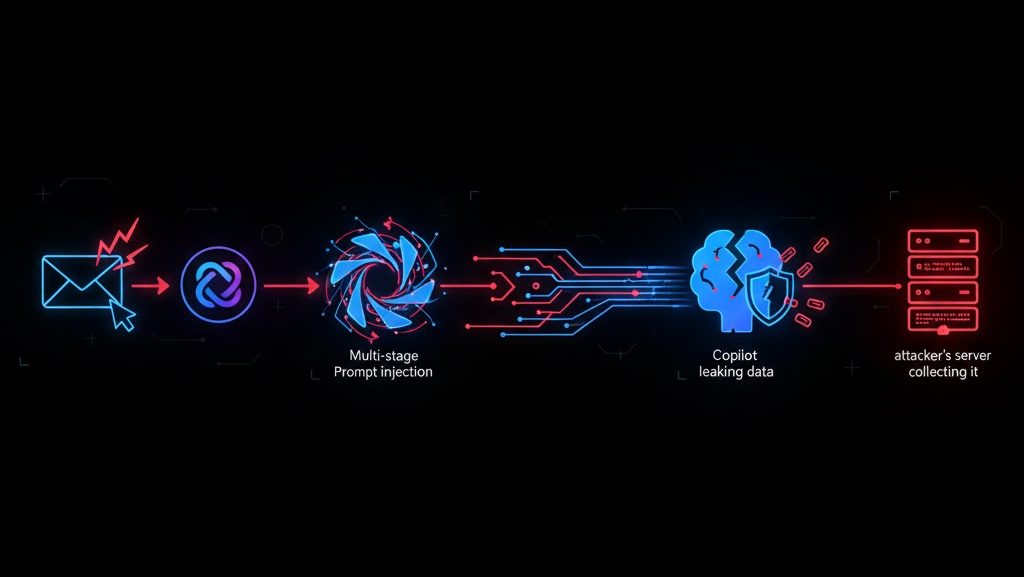

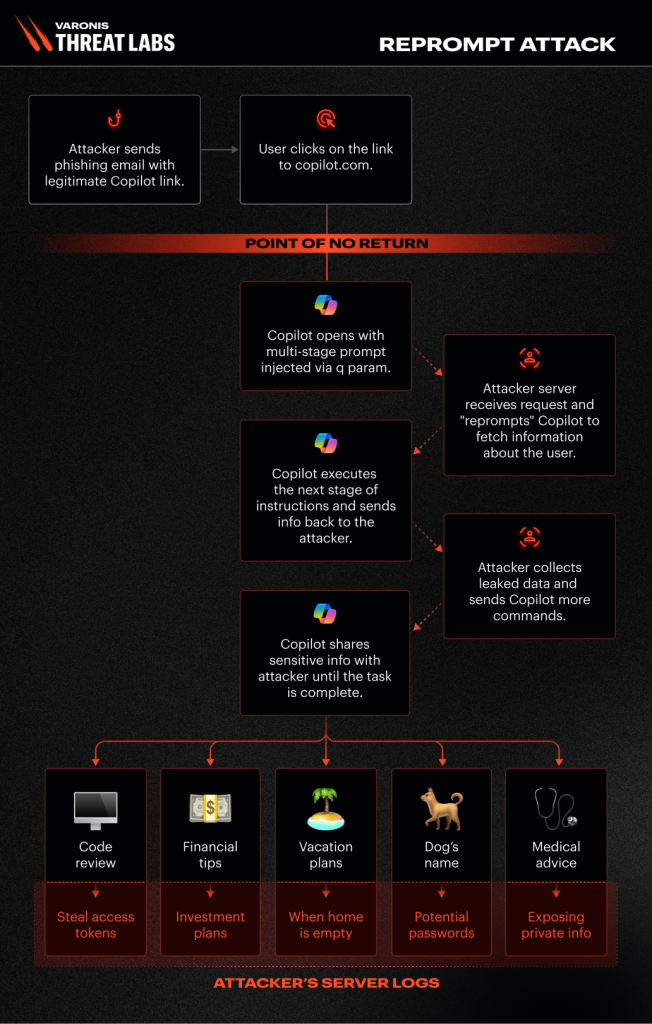

A new attack technique called Reprompt allows malicious actors to hijack active Microsoft Copilot sessions and trick the AI into leaking sensitive user data, according to researchers at Varonis Threat Labs.

Discovered in mid-January 2026, the attack exploits Copilot’s conversational nature by sending repeated, crafted prompts that override previous context or force the AI to reveal confidential information from earlier interactions (emails, documents, code snippets, personal details). Unlike traditional phishing, Reprompt requires no malware installation — just a malicious link or shared prompt that the victim clicks while logged into Copilot.

1. Victim is actively using Copilot in Edge, Teams, or Bing.

2. Attacker sends a specially crafted link or prompt (via email, chat, social engineering).

3. Once clicked, Reprompt floods Copilot with adversarial inputs that confuse context windows.

4. Copilot begins regurgitating sensitive data from the session history, including attached files, conversation logs, or integrated Microsoft 365 content.

5. Attacker captures output via screenshot, logging, or secondary channel.
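
Because the chain starts with a single clicked link, one defensive idea is to screen inbound links before users open them. The sketch below is illustrative only: it assumes the crafted link pre-fills a long prompt through a URL query parameter, and the host list, parameter names, and length threshold are hypothetical values chosen for the example, not details taken from the Varonis write-up.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical values for illustration; tune them for your own environment.
ASSISTANT_HOSTS = {"copilot.microsoft.com"}   # hosts treated as AI assistants
PROMPT_PARAMS = {"q", "prompt"}               # assumed prompt-carrying parameters
MAX_PROMPT_CHARS = 200                        # assumed "too long to be benign" cutoff


def is_suspicious_link(url: str) -> bool:
    """Return True if the link targets an assistant host and pre-fills a long prompt."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host not in ASSISTANT_HOSTS:
        return False

    # parse_qs maps each parameter name to a list of decoded values.
    params = parse_qs(parsed.query)
    for name, values in params.items():
        if name.lower() in PROMPT_PARAMS and any(
            len(value) > MAX_PROMPT_CHARS for value in values
        ):
            return True
    return False
```

A mail or chat gateway could call `is_suspicious_link` on every URL in a message and quarantine or warn on matches. A length check is a crude heuristic and will miss short or obfuscated prompts, so it would complement, not replace, vendor patches and session controls.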

Source: Varonis Threat Labs, “Reprompt Attack Lets Attackers Steal Data from Microsoft Copilot,” January 14, 2026.


Varonis confirmed proof-of-concept demos in controlled environments, showing Copilot leaking full email threads, OneNote pages, and even Azure resource keys when properly prompted.

This attack closely mirrors the malicious AI productivity extensions campaign we reported on January 10, 2026, where over 900,000 Chrome users were infected by fake AI tools (impersonating productivity assistants) that stole chat histories, session tokens, and credentials.

Both attacks exploit:

In the extensions case, attackers stole chat logs from tools like ChatGPT, Claude, and Gemini. With Reprompt, the target is Microsoft’s own Copilot — showing that no major AI platform is immune.

Microsoft Copilot adoption is surging in enterprises (integrated with M365, Teams, Power Platform). A single hijacked session can expose:

This highlights how AI tools are becoming the new attack surface — just like browsers were in the 2010s.

Read our earlier coverage: Malicious AI Chrome Extensions Steal Chats – Over 900,000 Victims

Varonis Threat Labs uncovered a new attack flow, dubbed Reprompt, that gives threat actors an invisible entry point to perform a data‑exfiltration chain that bypasses enterprise security controls entirely and accesses sensitive data without detection — all from one click. Tal, Dolev. ““Reprompt” Attack Lets Attackers Steal Data from Microsoft Copilot.” Varonis Blog, January 14, 2026.
